AI year in review

This has been a particularly transformative year in AI. The launch of ChatGPT late last year sparked a race in both industry and research to catch up and make the best use of this technology. That race translated into weekly, if not daily, releases of new research papers, models and products, a rate of development that felt hard to keep up with. This post tries to summarise the trends we are observing and makes some predictions for next year.

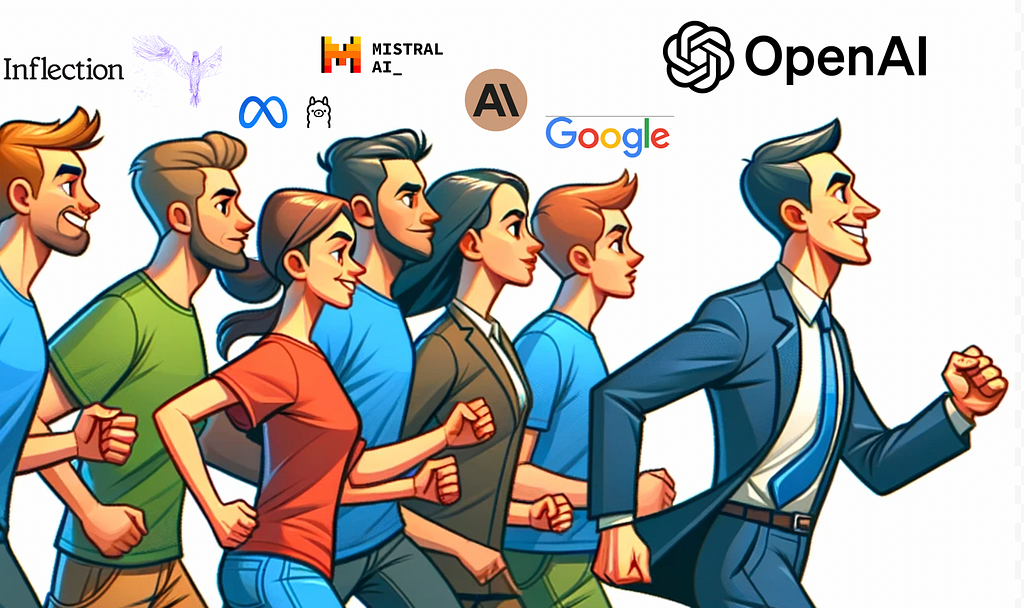

Progress is dictated by OpenAI

OpenAI is leading the way with regard to what is possible with today’s AI. GPT4 was a significant leap in capabilities, improving on its predecessor GPT3.5 by approximately 10 percentage points in academic benchmarks, surpassing specialised models in many cases, and exhibiting human level performance in tests designed to be taken by experts in fields such as medicine and law.

GPT4V(ision) was another significant leap, improving by at least 10 percentage points on the previous similar approach (Flamingo). For the first time in vision, it demonstrated really strong zero shot performance, i.e. performance without specialised training, which makes it useful out of the box in everyday tasks that require visual input.

The power of those models was unleashed when coupled with a conversational interface, as we all realised with ChatGPT. Along the same lines, OpenAI introduced the ability for GPT4 to access the internet, run code and call functions. This has opened up new opportunities, to the extent that it’s now not very hard to imagine how things could also go wrong.

OpenAI also released Whisper 3 and its own Text To Speech (TTS) service, outcompeting providers that have been collecting data and developing speech to text and text to speech services for years. They also released DALL·E 3, which seems to be particularly good at following very precise instructions for generating images. And while these releases were impressive on their own, they are much more useful in the context of ChatGPT, where you can have a conversation using your voice and create images by providing feedback.

On the business side, OpenAI also set the tone of the conversation this year, rapidly responding to user requests. For example, the GPT4 context window has gone from 8k tokens all the way to 128k, its price has been reduced by 3x, and there is now an Enterprise version, provided natively by OpenAI or through Microsoft Azure, that offers better governance for enterprise organizations.

Playing catchup

To a certain extent, everyone else has been playing catchup with OpenAI. The goalposts set by GPT4 still stand, even though you can argue that Gemini Ultra might have moved them by a few inches. Many efforts this year have been about replicating the performance and abilities of ChatGPT powered by GPT3.5 and GPT4. One year in, we can argue that the GPT3.5 level has been reached both in open source, by Llama (2 70b), Falcon (180b) and Mixtral (8x7b), and in closed source, by Inflection 2, Gemini Pro and Claude 2. As for GPT4, only Gemini Ultra claims to be better, with Claude 2 being a close second. Depending on when you consider the OpenAI models to have been developed, it seems to take open source 6–12 months to close the gap with closed source.

Another trend that gained a lot of attention this year was developing smaller models with equal capabilities. These smaller models like Alpaca, Vicuna and later WizardLM, phi, and Orca still require a larger model to teach them (a process usually referred to as distillation), but they demonstrate that it’s possible to have the performance of a very powerful model whilst being much smaller and cheaper to run. What’s interesting is that those models are being taught using synthetic data produced from larger models, most of the time GPT4, which elevates the role synthetic data will play in the future of AI.

Finally, there was a big push towards making it easier to work with models the size of GPT3.5 and GPT4. Quantising those models to 8 and 4 bits reduced the memory required to run them by 2x and 4x respectively, and importantly came at almost no loss of performance. Using Paged and Flash Attention reduced the time to run those models by 5x. Low Rank Adaptation (LoRA) became a popular way to adapt those large models to specific use cases. It requires training only a small number of additional parameters, which is not very different from training the previous generation of models. The development of a technique called S-LoRA made it possible to serve many of these adapters on the same hardware, which brought the economics of running multiple adapted LLMs very close to the cost of smaller models. It also became standard practice to tune those models using human or AI preferences. Reinforcement learning from preference data was pioneered by OpenAI, and an alternative called Direct Preference Optimization (DPO) emerged that makes this step much easier to perform.
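To make the arithmetic behind those techniques concrete, here is a rough sketch in Python. It rests on assumptions that are not stated in the post: a 16 bit baseline for the weights (which is what makes 8 and 4 bits a 2x and 4x reduction), a 70 billion parameter model as the example size, a single 4096 x 4096 weight matrix for LoRA, and the standard DPO objective with a hypothetical `beta` of 0.1. The function names are made up for illustration, and the memory figure covers weights only, not activations or the attention cache.

```python
import math


def weight_memory_gb(n_params, bits_per_weight):
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def lora_trainable_params(d_in, d_out, rank):
    """Extra parameters LoRA adds to one d_out x d_in weight matrix.

    LoRA keeps the original matrix frozen and learns two small matrices,
    one of shape (rank x d_in) and one of shape (d_out x rank).
    """
    return rank * (d_in + d_out)


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair, given sequence log-probabilities.

    Computes -log(sigmoid(beta * margin)), where the margin measures how much
    more the tuned model prefers the chosen answer than the reference does.
    """
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # Numerically stable form of -log(sigmoid(margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    n_params = 70_000_000_000  # assumed example size
    for bits in (16, 8, 4):
        print(f"{bits:>2} bit weights: {weight_memory_gb(n_params, bits):.0f} GB")

    full = 4096 * 4096
    extra = lora_trainable_params(4096, 4096, rank=8)
    print(f"LoRA trains {extra} parameters instead of {full}")

    print(f"DPO loss when the tuned model equals the reference: "
          f"{dpo_loss(-10.0, -12.0, -10.0, -12.0):.4f}")
```

The DPO function only needs log-probabilities from the model being tuned and from a frozen reference model, which is why it avoids the separate reward model and reinforcement learning loop.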

Products powered by AI

Unless you have been living under a rock this year, you have probably seen at least one instance of a product feature powered by AI. Examples range from Notion AI, which acts as auto complete, to Intercom’s Fin bot, which is advertised as being able to automatically handle around half of the customer support requests received. Many of those features seem to offer either a productivity boost to the user or a cost reduction to the company. Possibly the best example of this is GitHub Copilot, a widely used AI completion tool for programmers that seems to halve the time it takes to develop software.

One solution that has gained a lot of popularity this year is Retrieval Augmented Generation (RAG). This is because many companies want to develop a ChatGPT-like experience on top of their data. As an example, McKinsey developed Lilli, an internal assistant built on top of their proprietary data to help consultants tap into the knowledge of the firm. RAG is also proving popular as a way to reduce hallucinations, since it grounds the response of the model in the retrieved context. The UK government, for example, has announced a RAG chatbot to enable citizens to interact with the gov.uk information, a possibility that we talked about as early as March.
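The retrieve-then-generate idea can be sketched in a few lines of Python. This is a toy illustration only: it ranks documents by word overlap, whereas production systems typically use embeddings and a vector index, and it stops at building the prompt rather than calling a model. The documents, function names and prompt wording are all made up for the example.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=2):
    """Return up to k documents that share words with the question."""
    query = Counter(tokenize(question))
    scored = [(cosine(query, Counter(tokenize(doc))), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(question, passages):
    """Assemble a prompt that asks the model to answer from the passages only."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you do not know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


# Hypothetical knowledge base standing in for a company's own data.
DOCS = [
    "You can renew a passport online or by post.",
    "Council tax bands are set by the local authority.",
    "A driving licence can be renewed every ten years.",
]

if __name__ == "__main__":
    question = "How do I renew my passport?"
    print(build_prompt(question, retrieve(question, DOCS)))
```

The resulting prompt would then be sent to a language model. Because the answer is requested from the retrieved passages rather than from the model's memory, the response is easier to ground and to check against the source.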

The previous examples fit into the workflows businesses and users already have, but it’s important to note that AI is also enabling new ways of working and learning. Duolingo and Khan Academy, for example, demonstrated the idea of a personal tutor or coach to help you learn a language or any STEM topic. GitHub also introduced Copilot Chat, a completely new way of developing software using AI as your co-programmer.

What’s next

It’s probably safe to assume that we are going to see more models catching up with GPT4 next year. At the same time, we will probably see the next leap from OpenAI or Google. That next leap will most likely be multimodal in one way or another and it may be better than specialised models in performance but of course not in cost.

It’s very likely that we will see progress towards more useful assistants. This means that ChatGPT and AI in general will be able to complete more tasks that require several steps without supervision. We will become increasingly familiar with the idea of having an AI companion, not very different to how it’s hard to picture a world without Google today.

The biggest limitation of current AI is that its outputs contain hallucinations (non-factual statements), so this is another area where we will see a lot of progress. We are already seeing progress, with each iteration of those models being better at producing factual responses. If that trend continues, we will have ever more trustworthy systems in our hands, which makes them much more useful and powerful.

Lastly, current AI can write, see, hear and speak, but can it walk? We will definitely see progress on the embodied AI front, similar to the progress Tesla is making on the Optimus line. Tesla is not alone in pursuing embodied AI applications. Google is also very active in this area, with PaLM-E and other initiatives. Self driving could also benefit from those developments, since driving can be considered an interplay between a body, the car, and a brain, the driver. Wayve is making some progress on redefining self driving using LLMs and current AI technology. Robotics and embodied AI might prove more difficult to crack though. It’s worth noting that OpenAI dismantled its robotics team only two years ago, so there seems to be something about robotics that does not adhere to the scaling laws that are powering their other breakthroughs.

AI year in review was originally published in MantisNLP on Medium, where people are continuing the conversation by highlighting and responding to this story.