Applications of LLMs in Healthcare Research


Introduction

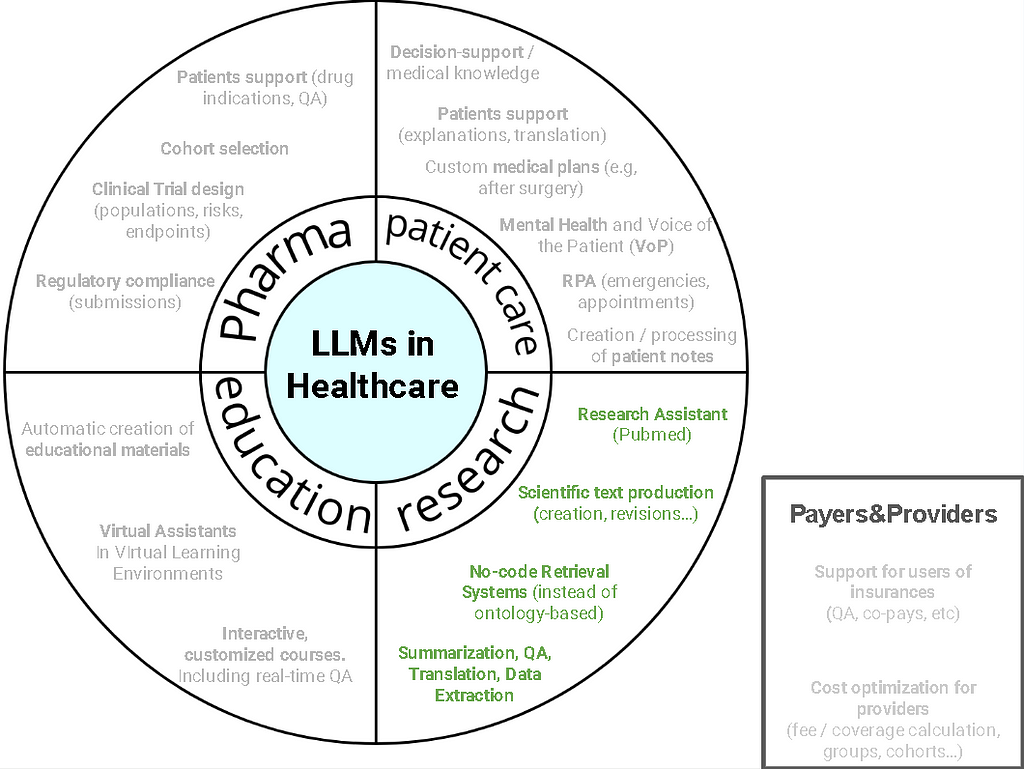

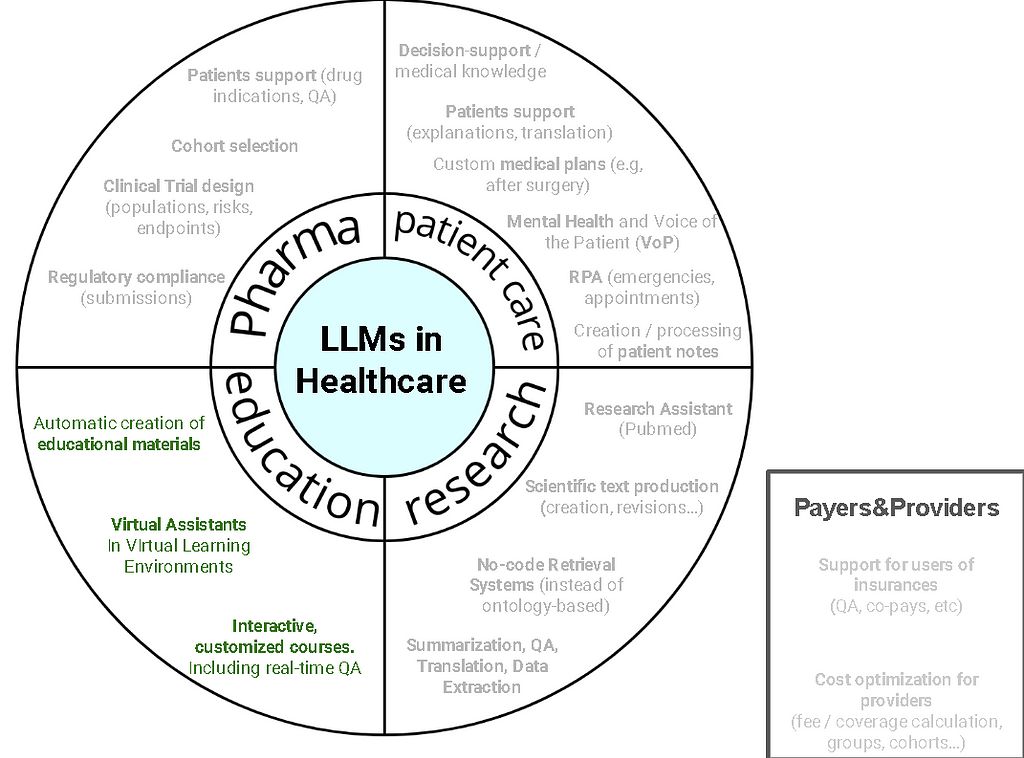

In the dynamic landscape of healthcare, new technologies are transforming many facets of the industry. One such innovation is the application of Large Language Models (LLMs), which use natural language processing to reshape medical and patient care, pharmaceutical practices, healthcare education, research, and the relationships between payers and providers.

This article explores the ways LLMs are making an impact in the Healthcare Research sector. In particular, Research Assistants and natural language-based Retrieval Systems can help with literature retrieval, cross-references and citations, automation of paper publication, data mining based on natural-language queries, and abstract creation or proofreading, among other tasks.

Enhancing Medical Research through Large Language Models

Large Language Models (LLMs) can support medical research across many stages of the scientific process. Research assistants benefit from the natural language processing capabilities of LLMs, since these models can assist with literature question answering, retrieval, review, data extraction, and even the generation of preliminary insights. LLMs also improve retrieval systems by enabling more effective, context-aware searches across large databases. Their ability to understand and generate human-like text makes communication between researchers and the model more seamless, which can improve collaboration and knowledge sharing within the scientific community.

1. Research Assistants

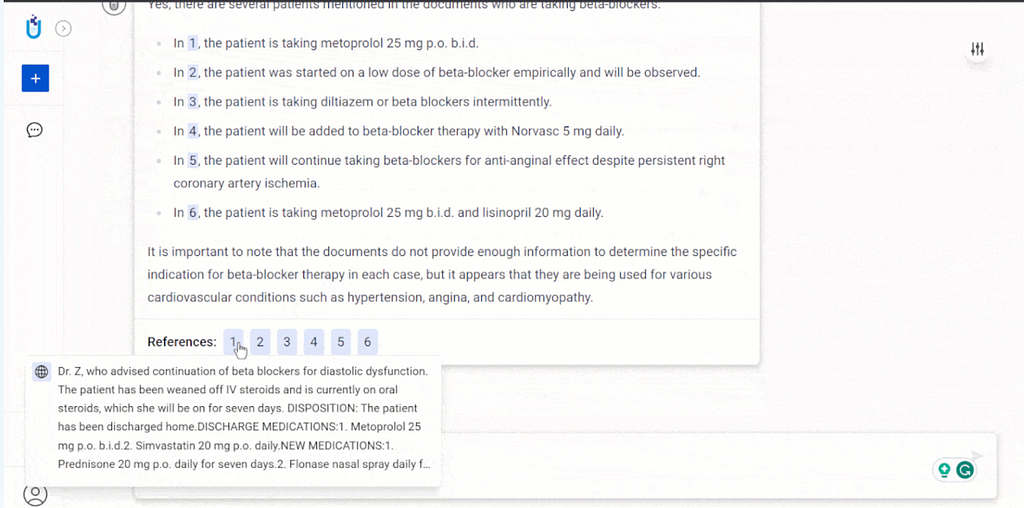

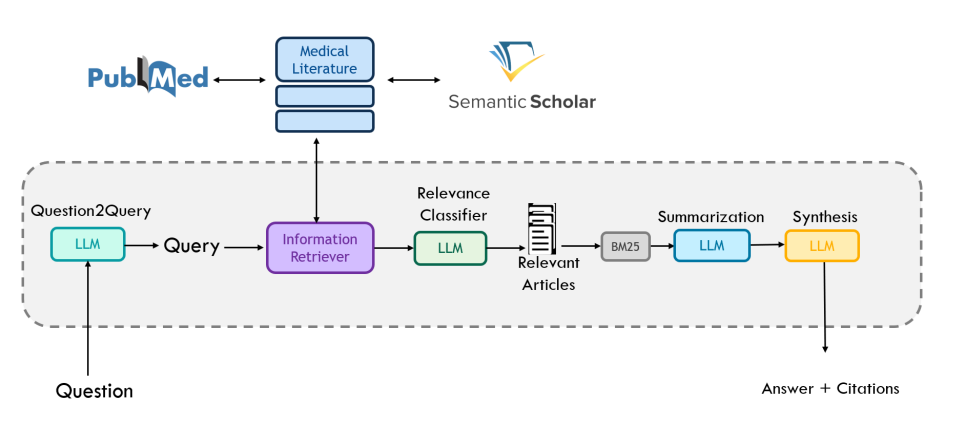

A Clinical Research Assistant built on Large Language Models (LLMs) can help manage the overwhelming volume of biomedical research papers, going beyond traditional question-answering frameworks.

Such assistants are already being built in the Healthcare AI space. They include real-time access to prebuilt knowledge bases (PubMed, medRxiv, Clinical Trials), **in-house, user-specific documents** through a Retrieval Augmented Generation (RAG) approach, and even any **structured data** (tables, spreadsheets, etc.) the user may have.
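To make the RAG idea more concrete, here is a minimal Python sketch, assuming a tiny in-memory collection of in-house documents. It retrieves the passages most similar to a question, using bag-of-words cosine similarity as a stand-in for the embedding-based search a production assistant would more likely use, and assembles a prompt that asks the model to answer only from numbered sources it can cite. The document IDs and texts and the `retrieve`/`build_prompt` helpers are hypothetical, and the call to the LLM itself is left out.

```python
import math
import re
from collections import Counter

# Hypothetical in-house documents (ID -> text); a real system would index many more.
DOCUMENTS = {
    "internal-001": "Dry eye disease is a multifactorial disease of the ocular surface.",
    "internal-002": "Artificial tears are a common first-line treatment for dry eye symptoms.",
    "internal-003": "Glaucoma damages the optic nerve and can lead to vision loss.",
}


def tokenize(text: str) -> Counter:
    """Lowercased bag-of-words term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 2) -> list[tuple[str, str]]:
    """Return the k (doc_id, text) pairs most similar to the question."""
    q = tokenize(question)
    ranked = sorted(DOCUMENTS.items(), key=lambda item: cosine(q, tokenize(item[1])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, k: int = 2) -> str:
    """Assemble a grounded prompt whose numbered sources the model can cite as [n]."""
    sources = retrieve(question, k)
    context = "\n".join(
        f"[{i}] ({doc_id}) {text}" for i, (doc_id, text) in enumerate(sources, start=1)
    )
    return (
        "Answer using only the sources below and cite them as [n].\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


print(build_prompt("What is dry eye disease?"))
```

Asking for numbered citations in the prompt is one way to support the explainability requirement listed further down, because each statement in the answer can be traced back to a source ID.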

But we can go beyond that: Intelligent Agents powered by LLMs can not only work on top of textual documents and answer questions about them, but also carry out complex custom queries, which may require including data from in-house relational SQL databases.
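For the SQL side of such an agent, one common safeguard is to let the model propose queries but execute them under read-only permissions. The sketch below assumes a hypothetical `enrolments` table in an in-memory SQLite database and hard-codes the SQL that an LLM would generate; the guard itself uses the standard library's `sqlite3.Connection.set_authorizer` to reject anything other than reads.

```python
import sqlite3

# SQLite authorizer action codes that a read-only agent is allowed to trigger.
ALLOWED_ACTIONS = {sqlite3.SQLITE_SELECT, sqlite3.SQLITE_READ, sqlite3.SQLITE_FUNCTION}


def read_only_authorizer(action, arg1, arg2, db_name, trigger):
    """Deny anything that is not a plain read, such as INSERT, UPDATE, DELETE or DROP."""
    return sqlite3.SQLITE_OK if action in ALLOWED_ACTIONS else sqlite3.SQLITE_DENY


def make_demo_db() -> sqlite3.Connection:
    """Build a hypothetical in-house table of trial enrolments, then lock it to reads."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE enrolments (trial_id TEXT, condition TEXT, patients INTEGER)")
    conn.executemany(
        "INSERT INTO enrolments VALUES (?, ?, ?)",
        [("T1", "dry eye disease", 120), ("T2", "dry eye disease", 80), ("T3", "glaucoma", 45)],
    )
    conn.commit()
    conn.set_authorizer(read_only_authorizer)  # from here on, only reads are permitted
    return conn


def run_agent_sql(conn: sqlite3.Connection, sql: str):
    """Execute SQL proposed by the LLM; return rows, or an error message the agent can act on."""
    try:
        return conn.execute(sql).fetchall()
    except sqlite3.DatabaseError as exc:
        return f"query rejected: {exc}"


conn = make_demo_db()
# In a real agent this string would be produced by the LLM; here it is hard-coded.
generated_sql = (
    "SELECT condition, SUM(patients) FROM enrolments GROUP BY condition ORDER BY condition"
)
print(run_agent_sql(conn, generated_sql))
print(run_agent_sql(conn, "DELETE FROM enrolments"))
```

Returning the error as text, rather than raising it, lets an agent loop feed the rejection back to the model so it can reformulate the query.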

One such assistant is the John Snow Labs Medical Chatbot. Let’s see how it answers the example question: What is dry eye disease?

Figure 1: John Snow Labs Medical Chatbot, leveraging PubMed and local documents in RAG-mode, to assist in medical research

As the John Snow Labs team states:

… one of the main concerns of the Research Assistants is Compliance. They should be built in such a sense that they guarantee:

- truthfulness, prioritizing trustworthy sources, and mitigating hallucinations;

- accuracy, surpassing general-purpose LLMs with document splitting, reranking, post-filtering, and similarity search;

- explainability, through resource citation in answer generation;

- security, with air-gapped deployment and on-premise functionality without external API calls;

- and low latency / processing times.
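Two of the accuracy measures in this list, document splitting and post-filtering, can be sketched in a few lines of Python. The example below assumes hypothetical documents, splits them into overlapping word windows, reranks the windows by the fraction of question terms they contain (a deliberately simple stand-in for a real reranking model), and drops windows below a minimum score. Each surviving chunk keeps its source ID and word offset so it can be cited. The window size, overlap, stop-word list, and threshold are illustrative assumptions, not recommended values.

```python
import re

STOPWORDS = {"a", "an", "and", "the", "of", "is", "how", "what", "with", "for", "in"}


def terms(text: str) -> set[str]:
    """Lowercased content words, ignoring punctuation and a few stop words."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def split_into_chunks(doc_id: str, text: str, size: int = 8, overlap: int = 3) -> list[dict]:
    """Split a document into overlapping word windows, keeping source and offset for citation."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than the window size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [
        {"source": doc_id, "start_word": start, "text": " ".join(words[start:start + size])}
        for start in range(0, max(len(words) - overlap, 1), step)
    ]


def select_context(question: str, documents: dict[str, str],
                   k: int = 2, min_score: float = 0.4) -> list[dict]:
    """Split, rerank by question-term coverage, post-filter weak chunks, keep the top k."""
    q_terms = terms(question)
    chunks = [c for doc_id, text in documents.items() for c in split_into_chunks(doc_id, text)]
    for chunk in chunks:
        overlap_terms = q_terms & terms(chunk["text"])
        chunk["score"] = len(overlap_terms) / len(q_terms) if q_terms else 0.0
    ranked = sorted(chunks, key=lambda c: c["score"], reverse=True)  # rerank: best first
    return [c for c in ranked if c["score"] >= min_score][:k]        # post-filter, then top k


# Hypothetical documents used only to exercise the functions above.
DOCS = {
    "paper-A": "Dry eye disease affects the tear film and the ocular surface. "
               "Treatment often starts with artificial tears and lid hygiene.",
    "paper-B": "Glaucoma is a leading cause of irreversible blindness worldwide "
               "and is usually managed with pressure lowering drops.",
}

for chunk in select_context("dry eye treatment with artificial tears", DOCS):
    print(chunk["source"], chunk["start_word"], round(chunk["score"], 2), chunk["text"])
```

The overlap keeps a sentence that straddles a window boundary from being lost, and the threshold lets the assistant answer "not found" instead of padding the prompt with weak matches.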

2. Scientific Text Production

LLMs can streamline the documentation process for medical scientific research publications, using their natural language processing capabilities to make content creation and documentation more efficient and accurate. Researchers and medical professionals can benefit in several ways. LLMs can automate the generation of various sections of a research paper, including the abstract and the conclusions, which accelerates the writing process and helps keep language and format consistent. They can also assist with proofreading by providing real-time suggestions for grammar, syntax, and writing style, helping researchers adhere to publication standards and keep the content well-structured and coherent. In addition, LLMs can aid in the interpretation of complex scientific data by generating concise, coherent explanations: researchers can input raw data, and LLMs can help translate it into meaningful insights, making it easier to communicate findings effectively.

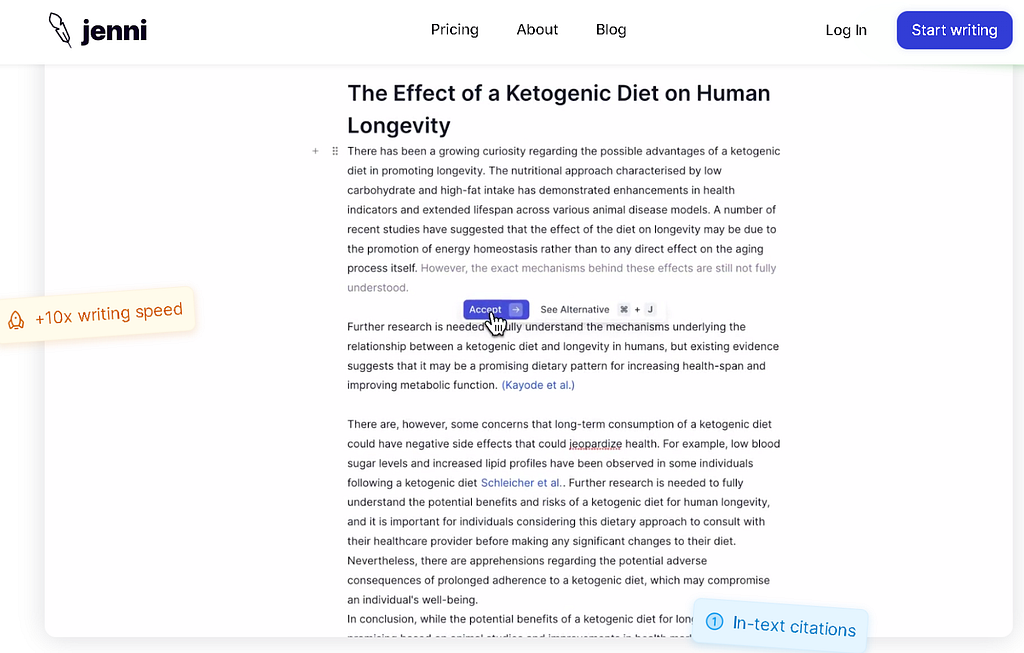

Several tools that guide publication and paper writing are already used in the medical industry. One example is Jenni.ai, whose main webpage showcases some of the features described so far, including automatic completion or creation of sections and quality assurance.

Figure 2: Write medical publications using Jenni.ai

As discussed above, LLMs can conduct automated literature reviews by extracting relevant information from a vast array of scientific articles. This streamlines the process of synthesizing existing knowledge and incorporating reviews of external literature into the research paper. LLMs can also help with cross-referencing and citations: by assisting with the tedious task of cross-referencing and citation formatting, they help researchers keep their reference lists accurate and complete, saving time and reducing the risk of errors.
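As a complement to LLM-assisted citation work, a simple programmatic check can catch cross-referencing errors before submission. This sketch assumes numeric, Vancouver-style citations such as [1], [2, 3], or [5-6], and reports numbers that are cited but missing from the reference list, as well as entries that are never cited. The draft text and placeholder references are hypothetical.

```python
import re

# Matches [1], [2, 3], [5-6] or [5–6] (hyphen or en dash) and captures the inside.
CITATION = re.compile(r"\[(\d+(?:\s*[-\u2013,]\s*\d+)*)\]")


def cited_numbers(text: str) -> set[int]:
    """Collect every reference number cited in the text, expanding ranges."""
    numbers = set()
    for group in CITATION.findall(text):
        for part in group.split(","):
            bounds = [int(x) for x in re.split(r"[-\u2013]", part)]
            numbers.update(range(bounds[0], bounds[-1] + 1))
    return numbers


def check_references(text: str, references: dict[int, str]) -> dict[str, list[int]]:
    """Compare in-text citations against the numbered reference list."""
    cited = cited_numbers(text)
    listed = set(references)
    return {
        "missing": sorted(cited - listed),  # cited in the text but absent from the list
        "uncited": sorted(listed - cited),  # in the list but never cited
    }


draft = "Dry eye disease is common [1]. Artificial tears help [2, 3], as do lid hygiene measures [5-6]."
refs = {n: f"Placeholder reference {n}" for n in range(1, 5)}
print(check_references(draft, refs))  # → {'missing': [5, 6], 'uncited': [4]}
```

A check like this is deterministic, so it can verify the output of an LLM that drafted or reformatted the references rather than relying on the model to be consistent.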

Figure 3: John Snow Labs Medical Chatbot “Literature Review” feature

All of these advancements ultimately empower researchers to focus more on the scientific aspects of their work while delegating tedious and bureaucratic documentation work to LLMs.

3. Retrieval Systems without keywords or encoded concepts

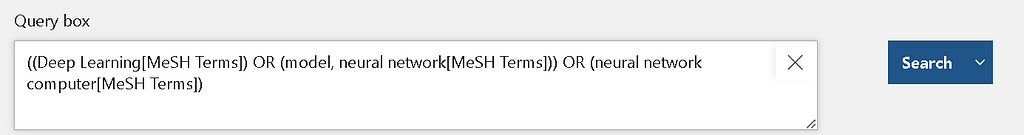

Now, let’s imagine you need to retrieve a series of articles from PubMed related to a specific medical concept. The alternatives are either using MeSH (Medical Subject Headings), which formally requires you to know how the concepts are encoded, or using the keywords or wording commonly used to mention that concept. Both have limitations that keep them from being considered state-of-the-art:

- First, keyword-based search engines, which rely on predefined tags (from MeSH), are not optimal for information retrieval. A clear example is PubMed’s keyword search, where you will find a lot of ambiguity in which tags were used to annotate each article. You can easily find articles about HIV-1 and HIV-2 tagged sometimes just as HIV and other times with the specific HIV-1 and HIV-2 tags. Even when they mention HIV Infections, only some of them carry the HIV Infections MeSH tag. The same happens with HIV Seropositivity. This makes keyword search a gamble, as your results depend on how each article happened to be tagged. The same applies to non-medical concepts. Want to try it yourself? Try to figure out the logic behind the use of Deep Learning vs Neural Networks vs Neural Networks, model vs AI vs Machine Learning…

- Second, code-based search. This is another way of carrying out a search, where you use a code from an ontology instead of the tag or keyword itself. PubMed also supports searching by MeSH codes. Unfortunately, the problem described above remains, as inconsistent annotations also lead to inconsistent codes.

One workaround is to write complex queries that take into account many different annotation scenarios, trying to cover every way of annotating a concept that may come to mind.

Figure 4: query using keywords, combining similar tags
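A query that combines similar tags can also be generated programmatically rather than typed by hand. This minimal sketch ORs every variant of a concept under PubMed’s `[MeSH Terms]` and `[tiab]` (title/abstract) field tags; the HIV variants come from the example above, while the choice of fields is an assumption. It also shows how quickly such queries grow, even for a single concept.

```python
def pubmed_or_query(terms: list[str], fields: tuple[str, ...] = ("MeSH Terms", "tiab")) -> str:
    """OR every term under every field tag, e.g. "HIV-1"[MeSH Terms] OR "HIV-1"[tiab]."""
    clauses = []
    for term in terms:
        clean = term.replace('"', "")  # a stray double quote would break the phrase search
        for field in fields:
            clauses.append(f'"{clean}"[{field}]')
    return "(" + " OR ".join(clauses) + ")"


# Variants mentioned in the text for a single underlying concept.
HIV_VARIANTS = ["HIV", "HIV-1", "HIV-2", "HIV Infections", "HIV Seropositivity"]
print(pubmed_or_query(HIV_VARIANTS))
```

Five variants under two fields already produce ten clauses, and every new annotation variant someone discovers has to be added by hand.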

However, this approach is cumbersome and still depends on anticipating every annotation variant. To address these limitations, there is a growing recognition of the need for more advanced approaches, such as natural language processing and Question-Answering (QA) systems. Shifting towards a natural language-based approach could improve the accuracy and flexibility of retrieval systems, allowing users to express queries in a way that matches their understanding and context, and overcoming the shortcomings of keyword-based systems.

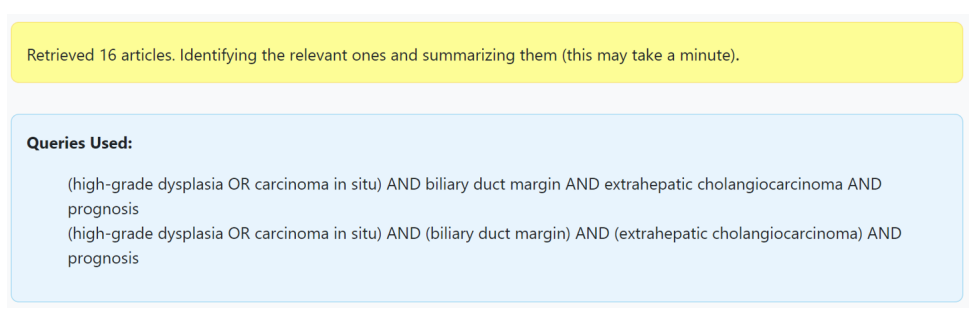

One of the digital tools using this approach is clinfo.ai. This project, developed by Stanford researchers and documented in a research publication, helps retrieve information from medical literature sources such as PubMed and Semantic Scholar, without the need for code- or keyword-specific queries.

It can also generate the queries for you, in case you want to continue your work with the query terms:

“I want to retrieve articles mentioning concepts as dysplasia (high-grade) or carcinoma, which also mention some other concepts as biliary duct margin and …”

Figure 5: Transforming NLP queries into code-based queries for literature research engines
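The sketch below is not clinfo.ai’s implementation; it only illustrates, under stated assumptions, how a natural-language request could be turned into a code-based query. A hypothetical `extract_concepts` function stands in for the LLM call and returns the concepts from the example request above as groups of variants (the elided part of the request is left out). The groups are ORed internally, ANDed together, and placed in a request URL for NCBI’s E-utilities ESearch endpoint, using its `db`, `term`, `retmax`, and `retmode` parameters.

```python
from urllib.parse import urlencode

ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def extract_concepts(request: str) -> list[list[str]]:
    """Hypothetical stand-in for the LLM step.

    A real system would prompt a model with `request`; here the output is fixed
    for illustration. Each inner list is one concept and its accepted variants.
    """
    return [
        ["high-grade dysplasia", "carcinoma"],
        ["biliary duct margin"],
    ]


def to_boolean_query(concepts: list[list[str]]) -> str:
    """OR the variants within each concept, then AND the concepts together."""
    groups = ["(" + " OR ".join(f'"{v}"[tiab]' for v in variants) + ")" for variants in concepts]
    return " AND ".join(groups)


def esearch_url(query: str, max_results: int = 20) -> str:
    """Build (but do not send) an ESearch request against PubMed."""
    params = {"db": "pubmed", "term": query, "retmax": max_results, "retmode": "json"}
    return f"{ESEARCH_URL}?{urlencode(params)}"


request = ("I want to retrieve articles mentioning high-grade dysplasia or carcinoma "
           "that also mention biliary duct margin")
query = to_boolean_query(extract_concepts(request))
print(query)
print(esearch_url(query))
```

Fetching that URL with any HTTP client returns JSON whose `esearchresult.idlist` field lists the matching PubMed IDs, which a tool could then retrieve and summarize.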

Powered by ChatGPT, it also summarizes the most important information from the relevant articles and provides full support for question answering.

Figure 6: clinfo.ai proposed solution

Conclusion

In summary, this article has shown the significant impact of Large Language Models (LLMs) on the Healthcare Research sector, across several dimensions. LLMs, acting as Research Assistants and natural language-based Retrieval Systems, play key roles in literature retrieval, cross-referencing, citation management, automation of paper publication, data mining based on human language queries, abstract creation, and proofreading. This wide range of applications underscores their versatility and efficiency in streamlining research processes and supporting researchers through the complexities of healthcare research. As the article demonstrates, LLMs are not just tools but valuable allies in advancing healthcare research.

Need help?

If you want us to guide you through the implementation of LLM-based engines for the Healthcare Research industry, our experienced team of NLP engineers with a background in healthcare will be happy to help. Just reach out to us at hi@mantisnlp.com

Applications of LLMs in Healthcare Research was originally published in MantisNLP on Medium.