How we are thinking about Generative AI: Costs and Abilities

We’ve written a couple of previous blogs giving our perspectives on generative AI (part 1 and part 2), but given how quickly things are moving, it’s time for an update.

Below, we’ll look at why generative AI models are becoming more cost-effective and more capable at a wider range of tasks, and how they are moving towards operating more autonomously.

Cost efficiency

Larger training datasets

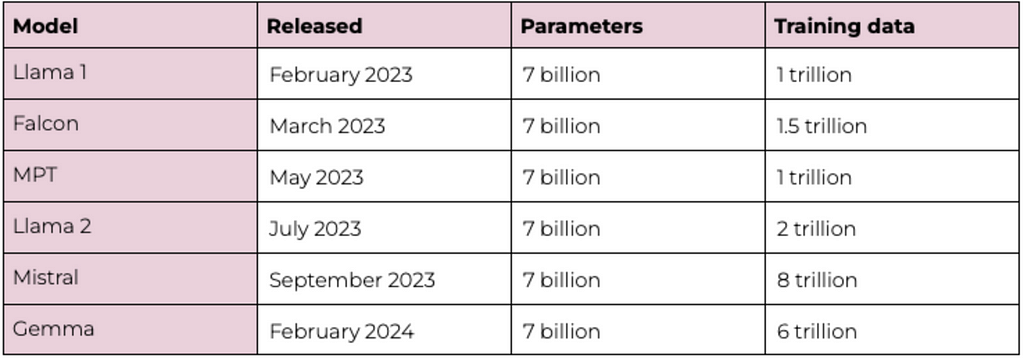

The rapid progress of large language models (LLMs) has, until recently, been driven by making them bigger, that is, by increasing their number of parameters. That has started to change, with newer models reaching higher performance levels by being trained on more data.

Parameters encode the relationships an LLM has learned, loosely analogous to the connections between neurons in the human brain. But, as with the brain, size is only part of the picture: to learn, a model needs to be exposed to stimuli, which in the case of LLMs means textual, audio and video information.

For comparison: GPT-3, released in May 2020, had 175 billion parameters, more than twice the 70 billion of Meta’s largest Llama 3 model, released in April this year. But the difference in the size of the training data is vast: Llama 3 was trained on 15 trillion tokens, 50 times the size of the GPT-3 training data.

And this is a trend we’ve seen with some other recently released models, most notably with Google’s Gemma, which has relatively few parameters, at 7 billion, but a large training dataset of 6 trillion tokens. Mistral, too, comes in at 7 billion parameters, but with an estimated 8 trillion tokens of training data.

All of this means that these models are cheaper to run while matching larger LLMs for performance. That is undoubtedly a good thing, and one which will allow the models to be applied more widely and more economically.
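One way to make this shift concrete is to divide training tokens by parameters. The sketch below does that for the figures quoted above. The GPT-3 token count is not stated directly in this post; it is inferred here from the "50 times" comparison, and the Mistral figure is an estimate, so treat the output as indicative only.

```python
# Figures as quoted in this post. The GPT-3 token count is an inference
# from the "50 times" comparison (15 trillion / 50), not a stated figure.
MODELS = {
    "GPT-3": {"params": 175e9, "tokens": 15e12 / 50},
    "Llama 3 70B": {"params": 70e9, "tokens": 15e12},
    "Gemma 7B": {"params": 7e9, "tokens": 6e12},
    "Mistral 7B": {"params": 7e9, "tokens": 8e12},  # estimated in the post
}


def tokens_per_parameter(params: float, tokens: float) -> float:
    """Return how many training tokens the model saw per parameter."""
    return tokens / params


for name, figures in MODELS.items():
    ratio = tokens_per_parameter(figures["params"], figures["tokens"])
    print(f"{name:12s} {ratio:8.1f} tokens per parameter")
```

On these numbers GPT-3 saw fewer than two training tokens per parameter, while the newer models saw hundreds or more.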

Domain-specific LLMs

It’s not long since domain-specific LLMs were gaining traction — the likes of BloombergGPT, the media and data giant’s finance-focused model (which cost millions to train), Google’s medical LLM Med-PaLM and ClimateBERT, a model pre-trained on climate-related research papers, news and company reports.

But we think the tide is turning a little on these, as generalised LLMs with innovative prompts can unlock specialist capabilities which outperform domain-specific models. That lowers the barrier to entry for many companies, which no longer need to train a specialist model of their own.

An example of this is MedPrompt, which uses a composition of prompting strategies to steer GPT-4 to high scores on medical benchmarks — both outperforming Med-PaLM 2, and reducing errors in responses.
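As an illustration of what one such prompting strategy can look like, here is a minimal sketch of choice-shuffle ensembling for multiple-choice questions: the same question is asked several times with the answer options in a different order, and the most common answer wins. This is a simplified sketch and not the MedPrompt implementation; `ask_model` and `toy_model` are hypothetical stand-ins for a real LLM call.

```python
import random
from collections import Counter
from typing import Callable, List, Sequence


def choice_shuffle_ensemble(
    question: str,
    choices: Sequence[str],
    ask_model: Callable[[str, List[str]], int],
    n_votes: int = 5,
    seed: int = 0,
) -> str:
    """Ask the same question with shuffled options and majority-vote the answer."""
    rng = random.Random(seed)
    votes: Counter = Counter()
    for _ in range(n_votes):
        shuffled = list(choices)
        rng.shuffle(shuffled)
        # ask_model returns the index of its chosen option in `shuffled`.
        picked = ask_model(question, shuffled)
        votes[shuffled[picked]] += 1
    return votes.most_common(1)[0][0]


def toy_model(question: str, options: List[str]) -> int:
    """Hypothetical stand-in for an LLM: always picks 'insulin' if present."""
    return options.index("insulin") if "insulin" in options else 0


answer = choice_shuffle_ensemble(
    "Which hormone lowers blood glucose?",
    ["insulin", "glucagon", "cortisol", "adrenaline"],
    toy_model,
)
print(answer)
```

Shuffling the options is meant to reduce the chance that the model's answer depends on where an option appears in the list rather than on its content.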

Fine-tuning

Tuning LLMs to specific tasks and making them more efficient to run on any device are also trends that are likely to continue, as demonstrated in Apple’s plans to integrate AI into their devices later this year.

Given the storage and computing power required to operate larger models, running them on everyday devices would be impractical. This is where LoRA (low-rank adaptation) adapters come in: a fine-tuning technique which keeps the base model’s weights frozen and trains only a small number of additional parameters for each task. By stacking these adapters on a compact base model, performance levels similar to those of previous generations of larger models can be achieved economically.
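To give a sense of why adapters are so cheap, here is a minimal pure-Python sketch of the low-rank idea behind LoRA: the frozen weight matrix W is left untouched, and a small pair of matrices B and A adds a correction on top of it. The layer size (4096) and rank (16) are illustrative assumptions, not the configuration of any particular model.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Number of weights in a dense d_out x d_in layer."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Weights in a rank-r adapter: B is d_out x r, A is r x d_in."""
    return rank * (d_in + d_out)


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, scale=1.0):
    """Compute W x + scale * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + scale * u for b, u in zip(base, update)]


# Illustrative sizes only (assumptions, not taken from the post).
dense = full_params(4096, 4096)
adapter = lora_params(4096, 4096, rank=16)
print(f"dense layer: {dense:,} weights")
print(f"adapter:     {adapter:,} weights ({adapter / dense:.2%} of the layer)")

# Tiny worked example: 2x2 frozen layer with a rank-1 adapter.
W = [[1, 0], [0, 1]]
A = [[1, 1]]      # 1 x 2
B = [[1], [2]]    # 2 x 1
print(lora_forward(W, A, B, [1, 2]))
```

With these assumed sizes the adapter holds under 1% of the weights of the layer it adapts, which is what makes it practical to keep several task-specific adapters alongside one shared base model.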

This is what allows Apple to have responsive, limited AI functionality on its devices, with a larger, more capable server-side model for more complex tasks.

Greater capabilities

While interacting with LLMs in ways other than text has previously been possible by joining different technologies together — for example, using speech recognition software, LLMs and synthesised speech for verbal interaction — the shift towards models which can do it all continues apace.

The most prominent example of a multimodal model is GPT-4o, OpenAI’s model announced in May, which can process and generate text, images and audio.

One of the advantages of multimodal models may again come in the training: an LLM’s grasp of the relations between words and their context may, for example, improve how a model understands the relations between images, sound and the wider world.

Human in the loop

We are starting to look towards the point where LLMs will be able to support humans in much more complex workflows than the models can currently handle.

A good example is a copywriter using AI to draft texts. A future workflow could look something like this:

- The copywriter consults an AI system about the content they want in the blog, as well as the tone, length, intended audience, etc.

- Once the above is finalised, the AI turns it into an outline blog structure which the copywriter reviews and edits.

- Once that is ready, another AI does the required research from verified sources, fleshing out the brief with knowledge and identifying areas that require more input from the copywriter.

- The copywriter reviews and edits the draft using the AI to further steer the direction, always reviewing its actions.

- As this iterative loop is happening, another AI proofreads and highlights any grammatical mistakes or deviations from the tone and audience specified in the beginning.

- The copywriter reviews the final draft and publishes.
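The workflow above can be sketched as a simple pipeline in which every AI step produces a proposal and a human approves or edits it before the next step runs. This is a simplified, sequential sketch under our own assumptions: the step functions and `copywriter_review` are hypothetical stubs standing in for real AI systems and a real reviewer, and the proofreading step runs at the end rather than alongside the editing loop.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Draft:
    text: str
    approved_steps: List[str] = field(default_factory=list)


def run_with_review(
    brief: str,
    ai_steps: List[Tuple[str, Callable[[str], str]]],
    human_review: Callable[[str, str], str],
) -> Draft:
    """Run each AI step, then hand its output to a human before moving on."""
    draft = Draft(text=brief)
    for name, ai_step in ai_steps:
        proposal = ai_step(draft.text)
        # The human always has the final say on what goes forward.
        draft.text = human_review(name, proposal)
        draft.approved_steps.append(name)
    return draft


# Hypothetical stand-ins for the AI systems in the workflow.
def outline_ai(text: str) -> str:
    return text + "\n[outline]"


def research_ai(text: str) -> str:
    return text + "\n[research notes]"


def proofread_ai(text: str) -> str:
    return text + "\n[proofread]"


def copywriter_review(step_name: str, proposal: str) -> str:
    # A real reviewer would edit the proposal; this stub approves it unchanged.
    return proposal


final = run_with_review(
    "Brief: blog on generative AI costs",
    [("outline", outline_ai), ("research", research_ai), ("proofread", proofread_ai)],
    copywriter_review,
)
print(final.approved_steps)
```

Keeping the review callback separate from the AI steps means the same loop works whether the reviewer approves, edits or rejects a proposal.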

Now, this example mostly deals with text, but when combined with the multimodal capabilities of newer models, it’s clear to us that there is great potential to build human-in-the-loop systems harnessing the power of generative AI.

Conclusion

The pace at which generative AI keeps developing means we are likely to be back with another update to this series soon. In the meantime, if you need any help or want to get a better understanding of what benefits NLP and AI could bring to you, get in touch.

How we are thinking about Generative AI: Costs and Abilities was originally published in MantisNLP on Medium.